Table of Links

3.2 Learning Residual Policies from Online Correction

3.3 An Integrated Deployment Framework and 3.4 Implementation Details

4.2 Quantitative Comparison on Four Assembly Tasks

4.3 Effectiveness in Addressing Different Sim-to-Real Gaps (Q4)

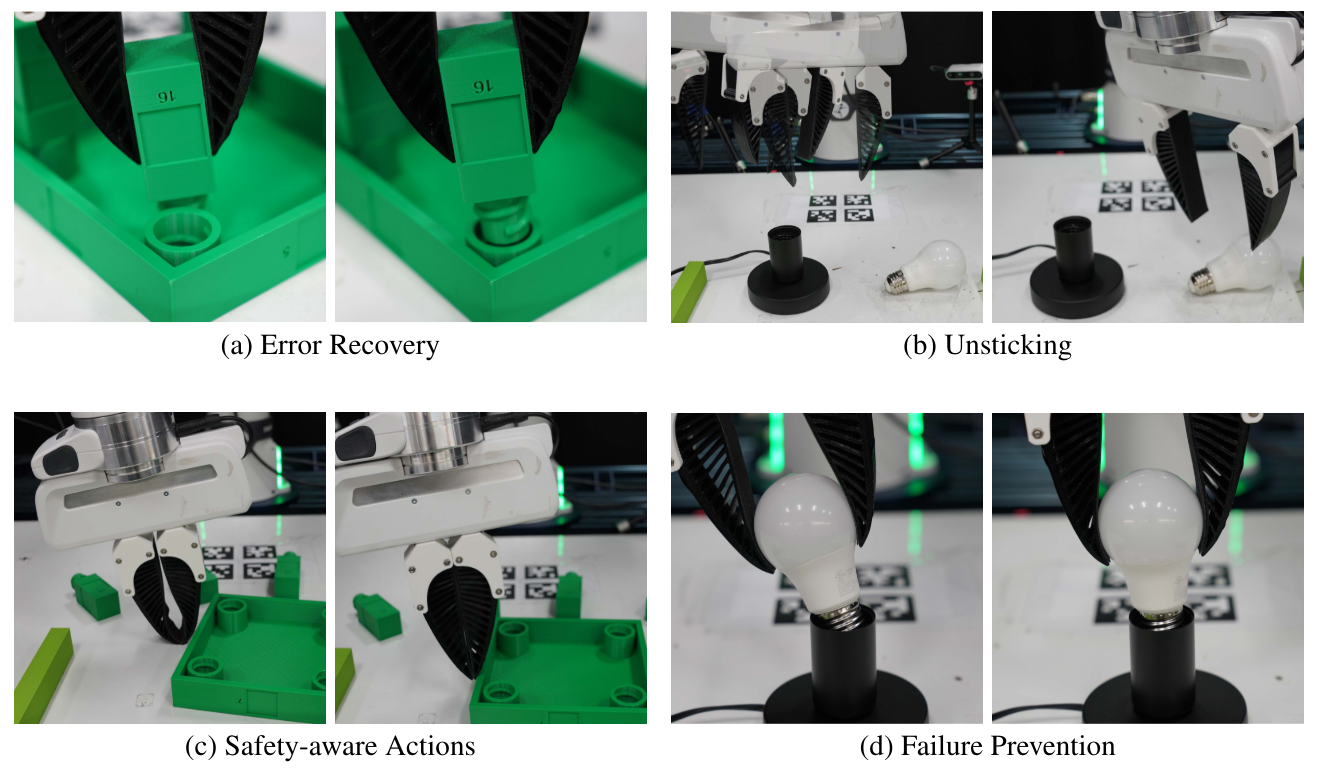

4.4 Scalability with Human Effort (Q5) and 4.5 Intriguing Properties and Emergent Behaviors (Q6)

6 Conclusion and Limitations, Acknowledgments, and References

A. Simulation Training Details

B. Real-World Learning Details

C. Experiment Settings and Evaluation Details

D. Additional Experiment Results

5 Related Work

Robot Learning via Sim-to-Real Transfer Physics-based simulations [7–11, 50, 93–95] have become a driving force [1, 2] for developing robotic skills in tabletop manipulation [96–99], mobile manipulation [100–103], fluid and deformable object manipulation [104–107], dexterous in-hand manipulation [14–18], locomotion with various robot morphologies [19–27, 108], object tossing [83], acrobatic flight [29, 30], etc. However, the domain gap between simulators and reality is not negligible [11]. Successful examples of sim-to-real transfer include locomotion [19–28], in-hand re-orientation for dexterous hands where objects are initially placed near the robot [14–18], and non-prehensile manipulation limited to simple tasks [31–40]. In this work, we tackle more challenging sim-to-real transfer for complex manipulation tasks and demonstrate that our approach can solve sophisticated contact-rich manipulation tasks. More importantly, it requires significantly less real-robot data than the prevalent imitation learning and offline RL approaches [68, 69, 72]. This makes solutions that are based on simulators and sim-to-real transfer more appealing to roboticists.

Sim-to-Real Gaps in Manipulation Tasks Despite the complex manipulation skills recently learned with RL in simulation [109], directly deploying learned control policies to physical robots often fails. The sim-to-real gaps [11, 41, 45, 110] that contribute to this performance discrepancy can be coarsely categorized as follows: a) perception gap [19, 42–44], where synthetic sensory observations differ from those measured in the real world; b) embodiment mismatch [19, 45, 46], where the robot models used in simulation do not match the real-world hardware precisely; c) controller inaccuracy [47–49], meaning that the results of deploying the same high-level commands (such as in configuration space [111] and task space [112]) differ between simulation and real hardware; and d) poor physical realism [50], where physical interactions such as contact and collision are poorly simulated [113].

Although these gaps may not be fully bridged, traditional methods to address them include system identification [19, 31, 51, 52], domain randomization [14, 53–55], real-world adaptation [56], and simulator augmentation [58–60]. However, system identification is mostly engineered on a case-by-case basis. Domain randomization suffers from the inability to identify and randomize all physical parameters. Methods with real-world adaptation, usually through meta-learning [114], incur potential safety concerns during the adaptation phase. Most of these approaches also rely on explicit and domain-specific knowledge about tasks and the simulator a priori. For instance, to perform system identification for closing the embodiment gap for a quadruped, Tan et al. [19] disassemble the physical robot and carefully calibrate parameters including size, mass, and inertia. Kim et al. [33] report that collaborative robots, such as the commonly used Franka Emika robot, have intricate joint friction that is hard to identify and randomize in typical physics simulators. To make a simulator more akin to the real world, Chebotar et al. [40] deploy trained virtual robots multiple times to refine the distributions of simulation parameters. This procedure not only introduces a significant real-world sampling effort, but also incurs potential safety concerns due to deploying suboptimal policies. In contrast, our method leverages human intervention data to implicitly overcome the transfer problem in a domain-agnostic way and also leads to safer deployment.
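To make the limitation of domain randomization concrete, the following is a minimal sketch of per-episode parameter sampling. It is not taken from any of the cited works: the parameter names, the ranges, and the helper `sample_sim_params` are hypothetical and chosen only for illustration. The point it illustrates is that only parameters someone has explicitly enumerated get randomized.

```python
import random
from typing import Dict, Tuple

# Hypothetical parameter ranges (assumed for illustration, not from the paper).
# Any physical effect that is missing from this table is never randomized.
PARAM_RANGES: Dict[str, Tuple[float, float]] = {
    "friction": (0.5, 1.5),
    "object_mass_kg": (0.05, 0.5),
    "joint_damping": (0.1, 1.0),
}


def sample_sim_params(
    rng: random.Random,
    ranges: Dict[str, Tuple[float, float]] = PARAM_RANGES,
) -> Dict[str, float]:
    """Draw one value per listed parameter, uniformly within its range."""
    return {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}


if __name__ == "__main__":
    rng = random.Random(0)
    # One fresh set of simulator parameters would be drawn per training episode.
    for episode in range(3):
        print(episode, sample_sim_params(rng))
```

Effects such as the intricate joint friction mentioned above would have to be identified and added to the table by hand before they could be covered.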

![Figure 8: We demonstrate that individual skills learned with TRANSIC can be effectively chained together to enable long-horizon contact-rich manipulation. a) The robot assembles a lamp (160 seconds in 1× speed). b) The robot assembles a square table from FurnitureBench [90] (550 seconds in 1× speed). Videos are available on transic-robot.github.io.](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-1z934y4.png)

Human-in-The-Loop Robot Learning Human-in-the-loop machine learning is a prevalent framework to inject human knowledge into autonomous systems [62, 115, 116]. Various forms of human feedback exist [63], ranging from passive judgement, such as preference [117–126] and evaluation [127–132], to active involvement, including intervention [133–135] and correction [136, 137]. They are widely adopted in solutions for sequential decision-making tasks. For instance, interactive imitation learning [66, 67, 70, 138] leverages human intervention and correction to help naïve imitators address data mismatch and compounding error. In the context of RL, reward functions can be derived to better align agent behaviors with human preferences [120, 123, 124, 127]. Notably, a recent trend focuses on continually improving robots’ capabilities by iteratively updating and deploying policies with human feedback [70], combining active human involvement with RL [137], and autonomously generating corrective intervention data [139]. Our work further extends this trend by showing that sim-to-real gaps can be effectively eliminated by using human intervention and correction signals.

In shared autonomy, robots and humans share the control authority to achieve a common goal [64, 65, 140–142]. This control paradigm has been largely studied in assistive robotics and human-robot collaboration [143–145]. In this work, we provide a novel perspective by employing it in sim-to-real transfer of robot control policies and demonstrating its importance in attaining effective transfer.

6 Conclusion and Limitations

In this work, we present TRANSIC, a holistic human-in-the-loop method to tackle sim-to-real transfer of policies for contact-rich manipulation tasks. We show that in sim-to-real transfer, a good base policy learned in simulation can be combined with limited real-world data to achieve success. However, effectively utilizing human correction data to address the sim-to-real gap is challenging, especially when we want to prevent catastrophic forgetting of the base policy. TRANSIC addresses these challenges by learning a gated residual policy from human correction data. We show that TRANSIC is effective as a holistic approach to address different types of sim-to-real gaps when they are present simultaneously; it is also effective as an approach to address individual gaps of very different natures. It also displays attractive properties, such as scaling with human effort.
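As a rough illustration of what a gated residual policy can look like at inference time, consider the sketch below. This section states only that TRANSIC learns a gated residual policy from human correction data. The hard threshold, the additive combination of base action and residual, and every name in the code (`gated_residual_action`, `gate`, `threshold`) are assumptions made for this example rather than the authors' implementation.

```python
from typing import Callable, List, Sequence

Action = List[float]


def gated_residual_action(
    obs: Sequence[float],
    base_policy: Callable[[Sequence[float]], Action],
    residual_policy: Callable[[Sequence[float], Action], Action],
    gate: Callable[[Sequence[float], Action], float],
    threshold: float = 0.5,
) -> Action:
    """Return the base action, corrected by a residual only when the gate fires.

    Assumed design: the gate outputs a score in [0, 1]; below the threshold the
    frozen base policy acts alone, so its behavior is left unchanged there.
    """
    base_action = base_policy(obs)
    if gate(obs, base_action) < threshold:
        return base_action
    delta = residual_policy(obs, base_action)
    # Additive correction on top of the base action (an assumption of this sketch).
    return [b + d for b, d in zip(base_action, delta)]


if __name__ == "__main__":
    # Toy stand-ins for learned networks.
    base = lambda obs: [1.0, 2.0]
    residual = lambda obs, action: [0.5, -0.5]
    print(gated_residual_action([0.0], base, residual, lambda o, a: 0.9))
    print(gated_residual_action([0.0], base, residual, lambda o, a: 0.1))
```

Because the base policy is never updated in this arrangement, correction data can only change behavior where the gate activates, which is one plausible way to limit forgetting of the simulation-trained policy.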

While TRANSIC achieves remarkable sim-to-real transfer results, it still has several limitations that should be addressed by follow-up studies. 1) The current tasks are limited to the tabletop scenario with a soft parallel-jaw gripper. However, with the development of teleoperation devices for more complicated robots, such as bimanual arms [146], dexterous hands [147], mobile manipulators [148, 149], and humanoids [150], TRANSIC could potentially be applied to these settings as well. 2) During the correction data collection phase, the human operator still manually decides when to intervene. This effort might be reduced by leveraging automatic failure detection techniques [151, 152]. 3) TRANSIC requires simulation policies with reasonable performance in the first place, and such policies are challenging to learn in their own right. Nevertheless, TRANSIC is compatible with and can benefit from recent advances in this direction [6, 85, 153].

Acknowledgments

We are grateful to Josiah Wong, Chengshu (Eric) Li, Weiyu Liu, Wenlong Huang, Stephen Tian, Sanjana Srivastava, and the SVL PAIR group for their helpful feedback and insightful discussions. This work is in part supported by the Stanford Institute for Human-Centered AI (HAI), ONR MURI N00014-22-1-2740, ONR MURI N00014-21-1-2801, and Schmidt Sciences. Ruohan Zhang is partially supported by the Wu Tsai Human Performance Alliance Fellowship.

References

[1] H. Choi, C. Crump, C. Duriez, A. Elmquist, G. Hager, D. Han, F. Hearl, J. Hodgins, A. Jain, F. Leve, C. Li, F. Meier, D. Negrut, L. Righetti, A. Rodriguez, J. Tan, and J. Trinkle. On the use of simulation in robotics: Opportunities, challenges, and suggestions for moving forward. Proceedings of the National Academy of Sciences, 118(1):e1907856118, 2021. doi:10.1073/pnas.1907856118. URL https://www.pnas.org/doi/abs/10.1073/pnas.1907856118.

[2] C. K. Liu and D. Negrut. The role of physics-based simulators in robotics. Annual Review of Control, Robotics, and Autonomous Systems, 4(1):35–58, 2021. doi:10.1146/annurev-control-072220-093055. URL https://doi.org/10.1146/annurev-control-072220-093055.

[3] P. Abbeel and A. Y. Ng. Apprenticeship learning via inverse reinforcement learning. In Proceedings of the Twenty-First International Conference on Machine Learning, ICML ’04, page 1, New York, NY, USA, 2004. Association for Computing Machinery. ISBN 1581138385. doi:10.1145/1015330.1015430. URL https://doi.org/10.1145/1015330.1015430.

[4] H. D. III, J. Langford, and D. Marcu. Search-based structured prediction. arXiv preprint arXiv: Arxiv-0907.0786, 2009.

[5] S. Yang, O. Nachum, Y. Du, J. Wei, P. Abbeel, and D. Schuurmans. Foundation models for decision making: Problems, methods, and opportunities. arXiv preprint arXiv: Arxiv-2303.04129, 2023.

[6] A. Mandlekar, S. Nasiriany, B. Wen, I. Akinola, Y. Narang, L. Fan, Y. Zhu, and D. Fox. Mimicgen: A data generation system for scalable robot learning using human demonstrations. arXiv preprint arXiv: Arxiv-2310.17596, 2023.

[7] E. Todorov, T. Erez, and Y. Tassa. Mujoco: A physics engine for model-based control. 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 5026–5033, 2012. doi:10.1109/IROS.2012.6386109.

[8] E. Coumans and Y. Bai. Pybullet, a python module for physics simulation for games, robotics and machine learning. http://pybullet.org, 2016–2021.

[9] Y. Zhu, J. Wong, A. Mandlekar, R. Martín-Martín, A. Joshi, S. Nasiriany, and Y. Zhu. robosuite: A modular simulation framework and benchmark for robot learning. arXiv preprint arXiv: Arxiv-2009.12293, 2020.

[10] V. Makoviychuk, L. Wawrzyniak, Y. Guo, M. Lu, K. Storey, M. Macklin, D. Hoeller, N. Rudin, A. Allshire, A. Handa, and G. State. Isaac gym: High performance gpu-based physics simulation for robot learning. arXiv preprint arXiv: Arxiv-2108.10470, 2021.

[11] C. Li, C. Gokmen, G. Levine, R. Martín-Martín, S. Srivastava, C. Wang, J. Wong, R. Zhang, M. Lingelbach, J. Sun, M. Anvari, M. Hwang, M. Sharma, A. Aydin, D. Bansal, S. Hunter, K.-Y. Kim, A. Lou, C. R. Matthews, I. Villa-Renteria, J. H. Tang, C. Tang, F. Xia, S. Savarese, H. Gweon, K. Liu, J. Wu, and L. Fei-Fei. BEHAVIOR-1k: A benchmark for embodied AI with 1,000 everyday activities and realistic simulation. In 6th Annual Conference on Robot Learning, 2022. URL https://openreview.net/forum?id=_8DoIe8G3t.

[12] A. Brohan, N. Brown, J. Carbajal, Y. Chebotar, J. Dabis, C. Finn, K. Gopalakrishnan, K. Hausman, A. Herzog, J. Hsu, J. Ibarz, B. Ichter, A. Irpan, T. Jackson, S. Jesmonth, N. J. Joshi, R. Julian, D. Kalashnikov, Y. Kuang, I. Leal, K.-H. Lee, S. Levine, Y. Lu, U. Malla, D. Manjunath, I. Mordatch, O. Nachum, C. Parada, J. Peralta, E. Perez, K. Pertsch, J. Quiambao, K. Rao, M. Ryoo, G. Salazar, P. Sanketi, K. Sayed, J. Singh, S. Sontakke, A. Stone, C. Tan, H. Tran, V. Vanhoucke, S. Vega, Q. Vuong, F. Xia, T. Xiao, P. Xu, S. Xu, T. Yu, and B. Zitkovich. Rt-1: Robotics transformer for real-world control at scale. arXiv preprint arXiv: Arxiv-2212.06817, 2022.

[13] K. Bousmalis, G. Vezzani, D. Rao, C. Devin, A. X. Lee, M. Bauza, T. Davchev, Y. Zhou, A. Gupta, A. Raju, A. Laurens, C. Fantacci, V. Dalibard, M. Zambelli, M. Martins, R. Pevceviciute, M. Blokzijl, M. Denil, N. Batchelor, T. Lampe, E. Parisotto, K. Żołna, S. Reed, S. G. Colmenarejo, J. Scholz, A. Abdolmaleki, O. Groth, J.-B. Regli, O. Sushkov, T. Rothörl, J. E. Chen, Y. Aytar, D. Barker, J. Ortiz, M. Riedmiller, J. T. Springenberg, R. Hadsell, F. Nori, and N. Heess. Robocat: A self-improving generalist agent for robotic manipulation. arXiv preprint arXiv: Arxiv-2306.11706, 2023.

[14] OpenAI, I. Akkaya, M. Andrychowicz, M. Chociej, M. Litwin, B. McGrew, A. Petron, A. Paino, M. Plappert, G. Powell, R. Ribas, J. Schneider, N. Tezak, J. Tworek, P. Welinder, L. Weng, Q. Yuan, W. Zaremba, and L. Zhang. Solving rubik’s cube with a robot hand. arXiv preprint arXiv: Arxiv-1910.07113, 2019.

[15] T. Chen, M. Tippur, S. Wu, V. Kumar, E. Adelson, and P. Agrawal. Visual dexterity: In-hand reorientation of novel and complex object shapes. Science Robotics, 8(84):eadc9244, 2023. doi:10.1126/scirobotics.adc9244. URL https://www.science.org/doi/abs/10.1126/scirobotics.adc9244.

[16] Y. Chen, C. Wang, L. Fei-Fei, and C. K. Liu. Sequential dexterity: Chaining dexterous policies for long-horizon manipulation. arXiv preprint arXiv: Arxiv-2309.00987, 2023.

[17] H. Qi, A. Kumar, R. Calandra, Y. Ma, and J. Malik. In-hand object rotation via rapid motor adaptation. arXiv preprint arXiv: Arxiv-2210.04887, 2022.

[18] H. Qi, B. Yi, S. Suresh, M. Lambeta, Y. Ma, R. Calandra, and J. Malik. General in-hand object rotation with vision and touch. arXiv preprint arXiv: Arxiv-2309.09979, 2023.

[19] J. Tan, T. Zhang, E. Coumans, A. Iscen, Y. Bai, D. Hafner, S. Bohez, and V. Vanhoucke. Sim-to-real: Learning agile locomotion for quadruped robots. arXiv preprint arXiv: Arxiv-1804.10332, 2018.

[20] A. Kumar, Z. Fu, D. Pathak, and J. Malik. RMA: rapid motor adaptation for legged robots. In D. A. Shell, M. Toussaint, and M. A. Hsieh, editors, Robotics: Science and Systems XVII, Virtual Event, July 12-16, 2021, 2021. doi:10.15607/RSS.2021.XVII.011. URL https://doi.org/10.15607/RSS.2021.XVII.011.

[21] Z. Zhuang, Z. Fu, J. Wang, C. Atkeson, S. Schwertfeger, C. Finn, and H. Zhao. Robot parkour learning. arXiv preprint arXiv: Arxiv-2309.05665, 2023.

[22] R. Yang, G. Yang, and X. Wang. Neural volumetric memory for visual locomotion control. arXiv preprint arXiv: Arxiv-2304.01201, 2023.

[23] H. Benbrahim and J. A. Franklin. Biped dynamic walking using reinforcement learning. Robotics and Autonomous Systems, 22(3):283–302, 1997. ISSN 0921-8890. doi:10.1016/S0921-8890(97)00043-2. URL https://www.sciencedirect.com/science/article/pii/S0921889097000432. Robot Learning: The New Wave.

[24] G. A. Castillo, B. Weng, W. Zhang, and A. Hereid. Reinforcement learning-based cascade motion policy design for robust 3d bipedal locomotion. IEEE Access, 10:20135–20148, 2022. doi:10.1109/ACCESS.2022.3151771.

[25] L. Krishna, G. A. Castillo, U. A. Mishra, A. Hereid, and S. Kolathaya. Linear policies are sufficient to realize robust bipedal walking on challenging terrains. arXiv preprint arXiv: Arxiv-2109.12665, 2021.

[26] J. Siekmann, K. Green, J. Warila, A. Fern, and J. Hurst. Blind bipedal stair traversal via sim-to-real reinforcement learning. arXiv preprint arXiv: Arxiv-2105.08328, 2021.

[27] I. Radosavovic, T. Xiao, B. Zhang, T. Darrell, J. Malik, and K. Sreenath. Real-world humanoid locomotion with reinforcement learning. arXiv preprint arXiv: Arxiv-2303.03381, 2023.

[28] Z. Li, X. B. Peng, P. Abbeel, S. Levine, G. Berseth, and K. Sreenath. Reinforcement learning for versatile, dynamic, and robust bipedal locomotion control. arXiv preprint arXiv: Arxiv-2401.16889, 2024.

[29] E. Kaufmann, L. Bauersfeld, A. Loquercio, M. Müller, V. Koltun, and D. Scaramuzza. Champion-level drone racing using deep reinforcement learning. Nature, 2023. doi:10.1038/s41586-023-06419-4. URL https://doi.org/10.1038/s41586-023-06419-4.

[30] Y. Song, A. Romero, M. Müller, V. Koltun, and D. Scaramuzza. Reaching the limit in autonomous racing: Optimal control versus reinforcement learning. Science Robotics, 8(82):eadg1462, 2023. doi:10.1126/scirobotics.adg1462. URL https://www.science.org/doi/abs/10.1126/scirobotics.adg1462.

[31] V. Lim, H. Huang, L. Y. Chen, J. Wang, J. Ichnowski, D. Seita, M. Laskey, and K. Goldberg. Planar robot casting with real2sim2real self-supervised learning. arXiv preprint arXiv: Arxiv-2111.04814, 2021.

[32] W. Zhou and D. Held. Learning to grasp the ungraspable with emergent extrinsic dexterity. In K. Liu, D. Kulic, and J. Ichnowski, editors, Conference on Robot Learning, CoRL 2022, 14-18 December 2022, Auckland, New Zealand, volume 205 of Proceedings of Machine Learning Research, pages 150–160. PMLR, 2022. URL https://proceedings.mlr.press/v205/zhou23a.html.

[33] M. Kim, J. Han, J. Kim, and B. Kim. Pre- and post-contact policy decomposition for nonprehensile manipulation with zero-shot sim-to-real transfer. arXiv preprint arXiv: Arxiv-2309.02754, 2023.

[34] X. Zhang, S. Jain, B. Huang, M. Tomizuka, and D. Romeres. Learning generalizable pivoting skills. In IEEE International Conference on Robotics and Automation, ICRA 2023, London, UK, May 29 - June 2, 2023, pages 5865–5871. IEEE, 2023. doi:10.1109/ICRA48891.2023.10161271. URL https://doi.org/10.1109/ICRA48891.2023.10161271.

[35] S. Kozlovsky, E. Newman, and M. Zacksenhouse. Reinforcement learning of impedance policies for peg-in-hole tasks: Role of asymmetric matrices. IEEE Robotics and Automation Letters, 7(4):10898–10905, 2022. doi:10.1109/LRA.2022.3191070.

[36] D. Son, H. Yang, and D. Lee. Sim-to-real transfer of bolting tasks with tight tolerance. In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 9056–9063, 2020. doi:10.1109/IROS45743.2020.9341644.

[37] B. Tang, M. A. Lin, I. Akinola, A. Handa, G. S. Sukhatme, F. Ramos, D. Fox, and Y. S. Narang. Industreal: Transferring contact-rich assembly tasks from simulation to reality. In K. E. Bekris, K. Hauser, S. L. Herbert, and J. Yu, editors, Robotics: Science and Systems XIX, Daegu, Republic of Korea, July 10-14, 2023, 2023. doi:10.15607/RSS.2023.XIX.039. URL https://doi.org/10.15607/RSS.2023.XIX.039.

[38] X. Zhang, C. Wang, L. Sun, Z. Wu, X. Zhu, and M. Tomizuka. Efficient sim-to-real transfer of contact-rich manipulation skills with online admittance residual learning. In 7th Annual Conference on Robot Learning, 2023. URL https://openreview.net/forum?id=gFXVysXh48K.

[39] X. Zhang, M. Tomizuka, and H. Li. Bridging the sim-to-real gap with dynamic compliance tuning for industrial insertion. arXiv preprint arXiv: Arxiv-2311.07499, 2023.

[40] Y. Chebotar, A. Handa, V. Makoviychuk, M. Macklin, J. Issac, N. Ratliff, and D. Fox. Closing the sim-to-real loop: Adapting simulation randomization with real world experience. arXiv preprint arXiv: Arxiv-1810.05687, 2018.

[41] N. Jakobi, P. Husbands, and I. Harvey. Noise and the reality gap: The use of simulation in evolutionary robotics. In F. Morán, A. Moreno, J. J. Merelo, and P. Chacón, editors, Advances in Artificial Life, pages 704–720, Berlin, Heidelberg, 1995. Springer Berlin Heidelberg. ISBN 978-3-540-49286-3.

[42] E. Tzeng, C. Devin, J. Hoffman, C. Finn, X. Peng, S. Levine, K. Saenko, and T. Darrell. Towards adapting deep visuomotor representations from simulated to real environments. ArXiv, abs/1511.07111, 2015. URL https://api.semanticscholar.org/CorpusID:1541419.

[43] K. Bousmalis, A. Irpan, P. Wohlhart, Y. Bai, M. Kelcey, M. Kalakrishnan, L. Downs, J. Ibarz, P. Pastor, K. Konolige, S. Levine, and V. Vanhoucke. Using simulation and domain adaptation to improve efficiency of deep robotic grasping. arXiv preprint arXiv: Arxiv-1709.07857, 2017.

[44] D. Ho, K. Rao, Z. Xu, E. Jang, M. Khansari, and Y. Bai. Retinagan: An object-aware approach to sim-to-real transfer. arXiv preprint arXiv: Arxiv-2011.03148, 2020.

[45] S. Koos, J.-B. Mouret, and S. Doncieux. Crossing the reality gap in evolutionary robotics by promoting transferable controllers. In Proceedings of the 12th Annual Conference on Genetic and Evolutionary Computation, GECCO ’10, page 119–126, New York, NY, USA, 2010. Association for Computing Machinery. ISBN 9781450300728. doi:10.1145/1830483.1830505. URL https://doi.org/10.1145/1830483.1830505.

[46] Z. Xie, G. Berseth, P. Clary, J. Hurst, and M. van de Panne. Feedback control for cassie with deep reinforcement learning. arXiv preprint arXiv: Arxiv-1803.05580, 2018.

[47] R. Martín-Martín, M. A. Lee, R. Gardner, S. Savarese, J. Bohg, and A. Garg. Variable impedance control in end-effector space: An action space for reinforcement learning in contact-rich tasks. arXiv preprint arXiv: Arxiv-1906.08880, 2019.

[48] J. Wong, V. Makoviychuk, A. Anandkumar, and Y. Zhu. Oscar: Data-driven operational space control for adaptive and robust robot manipulation. arXiv preprint arXiv: Arxiv-2110.00704, 2021.

[49] E. Aljalbout, F. Frank, M. Karl, and P. van der Smagt. On the role of the action space in robot manipulation learning and sim-to-real transfer. arXiv preprint arXiv: Arxiv-2312.03673, 2023.

[50] Y. S. Narang, K. Storey, I. Akinola, M. Macklin, P. Reist, L. Wawrzyniak, Y. Guo, Á. Moravánszky, G. State, M. Lu, A. Handa, and D. Fox. Factory: Fast contact for robotic assembly. In K. Hauser, D. A. Shell, and S. Huang, editors, Robotics: Science and Systems XVIII, New York City, NY, USA, June 27 - July 1, 2022, 2022. doi:10.15607/RSS.2022.XVIII.035. URL https://doi.org/10.15607/RSS.2022.XVIII.035.

[51] L. Ljung. System Identification, pages 163–173. Birkhäuser Boston, Boston, MA, 1998. ISBN 978-1-4612-1768-8. doi:10.1007/978-1-4612-1768-8_11. URL https://doi.org/10.1007/978-1-4612-1768-8_11.

[52] P. Chang and T. Padir. Sim2real2sim: Bridging the gap between simulation and real-world in flexible object manipulation. arXiv preprint arXiv: Arxiv-2002.02538, 2020.

[53] X. B. Peng, M. Andrychowicz, W. Zaremba, and P. Abbeel. Sim-to-real transfer of robotic control with dynamics randomization. arXiv preprint arXiv: Arxiv-1710.06537, 2017.

[54] A. Handa, A. Allshire, V. Makoviychuk, A. Petrenko, R. Singh, J. Liu, D. Makoviichuk, K. V. Wyk, A. Zhurkevich, B. Sundaralingam, and Y. S. Narang. Dextreme: Transfer of agile in-hand manipulation from simulation to reality. In IEEE International Conference on Robotics and Automation, ICRA 2023, London, UK, May 29 - June 2, 2023, pages 5977–5984. IEEE, 2023. doi:10.1109/ICRA48891.2023.10160216. URL https://doi.org/10.1109/ICRA48891.2023.10160216.

[55] J. Wang, Y. Qin, K. Kuang, Y. Korkmaz, A. Gurumoorthy, H. Su, and X. Wang. Cyberdemo: Augmenting simulated human demonstration for real-world dexterous manipulation. arXiv preprint arXiv: Arxiv-2402.14795, 2024.

[56] G. Schoettler, A. Nair, J. A. Ojea, S. Levine, and E. Solowjow. Meta-reinforcement learning for robotic industrial insertion tasks. arXiv preprint arXiv: Arxiv-2004.14404, 2020.

[57] Y. Zhang, L. Ke, A. Deshpande, A. Gupta, and S. Srinivasa. Cherry-Picking with Reinforcement Learning. In Proceedings of Robotics: Science and Systems, Daegu, Republic of Korea, July 2023. doi:10.15607/RSS.2023.XIX.021.

[58] Y. Chebotar, A. Handa, V. Makoviychuk, M. Macklin, J. Issac, N. D. Ratliff, and D. Fox. Closing the sim-to-real loop: Adapting simulation randomization with real world experience. In International Conference on Robotics and Automation, ICRA 2019, Montreal, QC, Canada, May 20-24, 2019, pages 8973–8979. IEEE, 2019. doi:10.1109/ICRA.2019.8793789. URL https://doi.org/10.1109/ICRA.2019.8793789.

[59] J. P. Hanna and P. Stone. Grounded action transformation for robot learning in simulation. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, AAAI’17, page 4931–4932. AAAI Press, 2017.

[60] E. Heiden, D. Millard, E. Coumans, and G. S. Sukhatme. Augmenting differentiable simulators with neural networks to close the sim2real gap. arXiv preprint arXiv: Arxiv-2007.06045, 2020.

[61] C. A. Cruz and T. Igarashi. A survey on interactive reinforcement learning: Design principles and open challenges. arXiv preprint arXiv: Arxiv-2105.12949, 2021.

[62] Y. Cui, P. Koppol, H. Admoni, S. Niekum, R. Simmons, A. Steinfeld, and T. Fitzgerald. Understanding the relationship between interactions and outcomes in human-in-the-loop machine learning. In Z.-H. Zhou, editor, Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, IJCAI-21, pages 4382–4391. International Joint Conferences on Artificial Intelligence Organization, 8 2021. doi:10.24963/ijcai.2021/599. URL https://doi.org/10.24963/ijcai.2021/599. Survey Track.

[63] R. Zhang, F. Torabi, L. Guan, D. H. Ballard, and P. Stone. Leveraging human guidance for deep reinforcement learning tasks. arXiv preprint arXiv: Arxiv-1909.09906, 2019.

[64] S. Javdani, S. S. Srinivasa, and J. A. Bagnell. Shared autonomy via hindsight optimization. arXiv preprint arXiv: Arxiv-1503.07619, 2015.

[65] S. Reddy, A. D. Dragan, and S. Levine. Shared autonomy via deep reinforcement learning. arXiv preprint arXiv: Arxiv-1802.01744, 2018.

[66] M. Kelly, C. Sidrane, K. Driggs-Campbell, and M. J. Kochenderfer. Hg-dagger: Interactive imitation learning with human experts. arXiv preprint arXiv: Arxiv-1810.02890, 2018.

[67] A. Mandlekar, D. Xu, R. Martín-Martín, Y. Zhu, L. Fei-Fei, and S. Savarese. Human-in-the-loop imitation learning using remote teleoperation. arXiv preprint arXiv: Arxiv-2012.06733, 2020.

[68] A. Mandlekar, D. Xu, J. Wong, S. Nasiriany, C. Wang, R. Kulkarni, L. Fei-Fei, S. Savarese, Y. Zhu, and R. Martín-Martín. What matters in learning from offline human demonstrations for robot manipulation. arXiv preprint arXiv: Arxiv-2108.03298, 2021.

[69] I. Kostrikov, A. Nair, and S. Levine. Offline reinforcement learning with implicit q-learning. arXiv preprint arXiv: Arxiv-2110.06169, 2021.

[70] H. Liu, S. Nasiriany, L. Zhang, Z. Bao, and Y. Zhu. Robot learning on the job: Human-in-the-loop autonomy and learning during deployment. arXiv preprint arXiv: Arxiv-2211.08416, 2022.

[71] A. Abdolmaleki, J. T. Springenberg, Y. Tassa, R. Munos, N. Heess, and M. Riedmiller. Maximum a posteriori policy optimisation. arXiv preprint arXiv: Arxiv-1806.06920, 2018.

[72] D. A. Pomerleau. Alvinn: An autonomous land vehicle in a neural network. In D. Touretzky, editor, Advances in Neural Information Processing Systems, volume 1. Morgan-Kaufmann, 1988. URL https://proceedings.neurips.cc/paper_files/paper/1988/file/812b4ba287f5ee0bc9d43bbf5bbe87fb-Paper.pdf.

[73] Y. Zhu, Z. Wang, J. Merel, A. Rusu, T. Erez, S. Cabi, S. Tunyasuvunakool, J. Kramár, R. Hadsell, N. de Freitas, and N. Heess. Reinforcement and imitation learning for diverse visuomotor skills. arXiv preprint arXiv: Arxiv-1802.09564, 2018.

[74] A. Xie, L. Lee, T. Xiao, and C. Finn. Decomposing the generalization gap in imitation learning for visual robotic manipulation. arXiv preprint arXiv: Arxiv-2307.03659, 2023.

[75] Y. Qin, B. Huang, Z.-H. Yin, H. Su, and X. Wang. Dexpoint: Generalizable point cloud reinforcement learning for sim-to-real dexterous manipulation. arXiv preprint arXiv: Arxiv-2211.09423, 2022.

[76] O. Khatib. A unified approach for motion and force control of robot manipulators: The operational space formulation. IEEE Journal on Robotics and Automation, 3(1):43–53, 1987. doi:10.1109/JRA.1987.1087068.

[77] J. Nakanishi, R. Cory, M. Mistry, J. Peters, and S. Schaal. Operational space control: A theoretical and empirical comparison. The International Journal of Robotics Research, 27(6):737–757, 2008. doi:10.1177/0278364908091463. URL https://doi.org/10.1177/0278364908091463.

[78] A. A. Rusu, S. G. Colmenarejo, C. Gulcehre, G. Desjardins, J. Kirkpatrick, R. Pascanu, V. Mnih, K. Kavukcuoglu, and R. Hadsell. Policy distillation. arXiv preprint arXiv: Arxiv-1511.06295, 2015.

[79] T. Chen, M. Tippur, S. Wu, V. Kumar, E. Adelson, and P. Agrawal. Visual dexterity: In-hand dexterous manipulation from depth. arXiv preprint arXiv: Arxiv-2211.11744, 2022.

[80] T. Chen, J. Xu, and P. Agrawal. A system for general in-hand object re-orientation. In A. Faust, D. Hsu, and G. Neumann, editors, Conference on Robot Learning, 8-11 November 2021, London, UK, volume 164 of Proceedings of Machine Learning Research, pages 297–307. PMLR, 2021. URL https://proceedings.mlr.press/v164/chen22a.html.

[81] T. Johannink, S. Bahl, A. Nair, J. Luo, A. Kumar, M. Loskyll, J. A. Ojea, E. Solowjow, and S. Levine. Residual reinforcement learning for robot control. arXiv preprint arXiv: Arxiv-1812.03201, 2018.

[82] T. Silver, K. Allen, J. Tenenbaum, and L. Kaelbling. Residual policy learning. arXiv preprint arXiv: Arxiv-1812.06298, 2018.

[83] A. Zeng, S. Song, J. Lee, A. Rodriguez, and T. Funkhouser. Tossingbot: Learning to throw arbitrary objects with residual physics. arXiv preprint arXiv: Arxiv-1903.11239, 2019.

[84] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov. Proximal policy optimization algorithms. arXiv preprint arXiv: Arxiv-1707.06347, 2017.

[85] C. Chi, S. Feng, Y. Du, Z. Xu, E. Cousineau, B. Burchfiel, and S. Song. Diffusion policy: Visuomotor policy learning via action diffusion. In K. E. Bekris, K. Hauser, S. L. Herbert, and J. Yu, editors, Robotics: Science and Systems XIX, Daegu, Republic of Korea, July 10-14, 2023, 2023. doi:10.15607/RSS.2023.XIX.026. URL https://doi.org/10.15607/RSS.2023.XIX.026.

[86] C. R. Qi, H. Su, K. Mo, and L. J. Guibas. Pointnet: Deep learning on point sets for 3d classification and segmentation. arXiv preprint arXiv: Arxiv-1612.00593, 2016.

[87] A. Jaegle, F. Gimeno, A. Brock, A. Zisserman, O. Vinyals, and J. Carreira. Perceiver: General perception with iterative attention. arXiv preprint arXiv: Arxiv-2103.03206, 2021.

[88] J. Lee, Y. Lee, J. Kim, A. R. Kosiorek, S. Choi, and Y. W. Teh. Set transformer: A framework for attention-based permutation-invariant neural networks. arXiv preprint arXiv: Arxiv-1810.00825, 2018.

[89] I. Loshchilov and F. Hutter. Sgdr: Stochastic gradient descent with warm restarts. arXiv preprint arXiv: Arxiv-1608.03983, 2016.

[90] M. Heo, Y. Lee, D. Lee, and J. J. Lim. Furniturebench: Reproducible real-world benchmark for long-horizon complex manipulation. In K. E. Bekris, K. Hauser, S. L. Herbert, and J. Yu, editors, Robotics: Science and Systems XIX, Daegu, Republic of Korea, July 10-14, 2023, 2023. doi:10.15607/RSS.2023.XIX.041. URL https://doi.org/10.15607/RSS.2023.XIX.041.

[91] A. Fishman, A. Murali, C. Eppner, B. Peele, B. Boots, and D. Fox. Motion policy networks. In K. Liu, D. Kulic, and J. Ichnowski, editors, Conference on Robot Learning, CoRL 2022, 14-18 December 2022, Auckland, New Zealand, volume 205 of Proceedings of Machine Learning Research, pages 967–977. PMLR, 2022. URL https://proceedings.mlr.press/v205/fishman23a.html.

[92] R. Hoque, A. Balakrishna, E. Novoseller, A. Wilcox, D. S. Brown, and K. Goldberg. Thriftydagger: Budget-aware novelty and risk gating for interactive imitation learning. arXiv preprint arXiv: Arxiv-2109.08273, 2021.

[93] R. Tedrake and the Drake Development Team. Drake: Model-based design and verification for robotics, 2019. URL https://drake.mit.edu.

[94] F. Xiang, Y. Qin, K. Mo, Y. Xia, H. Zhu, F. Liu, M. Liu, H. Jiang, Y. Yuan, H. Wang, L. Yi, A. X. Chang, L. J. Guibas, and H. Su. Sapien: A simulated part-based interactive environment. arXiv preprint arXiv: Arxiv-2003.08515, 2020.

[95] M. Mittal, C. Yu, Q. Yu, J. Liu, N. Rudin, D. Hoeller, J. L. Yuan, P. P. Tehrani, R. Singh, Y. Guo, H. Mazhar, A. Mandlekar, B. Babich, G. State, M. Hutter, and A. Garg. Orbit: A unified simulation framework for interactive robot learning environments. arXiv preprint arXiv: Arxiv-2301.04195, 2023.

[96] A. Zeng, P. Florence, J. Tompson, S. Welker, J. Chien, M. Attarian, T. Armstrong, I. Krasin, D. Duong, A. Wahid, V. Sindhwani, and J. Lee. Transporter networks: Rearranging the visual world for robotic manipulation. arXiv preprint arXiv: Arxiv-2010.14406, 2020.

[97] M. Shridhar, L. Manuelli, and D. Fox. Cliport: What and where pathways for robotic manipulation. arXiv preprint arXiv: Arxiv-2109.12098, 2021.

[98] Y. Jiang, A. Gupta, Z. Zhang, G. Wang, Y. Dou, Y. Chen, L. Fei-Fei, A. Anandkumar, Y. Zhu, and L. Fan. Vima: General robot manipulation with multimodal prompts. arXiv preprint arXiv: Arxiv-2210.03094, 2022.

[99] M. Shridhar, L. Manuelli, and D. Fox. Perceiver-actor: A multi-task transformer for robotic manipulation. arXiv preprint arXiv: Arxiv-2209.05451, 2022.

[100] D. Batra, A. X. Chang, S. Chernova, A. J. Davison, J. Deng, V. Koltun, S. Levine, J. Malik, I. Mordatch, R. Mottaghi, M. Savva, and H. Su. Rearrangement: A challenge for embodied ai. arXiv preprint arXiv: Arxiv-2011.01975, 2020.

[101] J. Gu, D. S. Chaplot, H. Su, and J. Malik. Multi-skill mobile manipulation for object rearrangement. arXiv preprint arXiv: Arxiv-2209.02778, 2022.

[102] S. Yenamandra, A. Ramachandran, K. Yadav, A. Wang, M. Khanna, T. Gervet, T.-Y. Yang, V. Jain, A. W. Clegg, J. Turner, Z. Kira, M. Savva, A. Chang, D. S. Chaplot, D. Batra, R. Mottaghi, Y. Bisk, and C. Paxton. Homerobot: Open-vocabulary mobile manipulation. arXiv preprint arXiv: Arxiv-2306.11565, 2023.

[103] K. Ehsani, T. Gupta, R. Hendrix, J. Salvador, L. Weihs, K.-H. Zeng, K. P. Singh, Y. Kim, W. Han, A. Herrasti, R. Krishna, D. Schwenk, E. VanderBilt, and A. Kembhavi. Imitating shortest paths in simulation enables effective navigation and manipulation in the real world. arXiv preprint arXiv: Arxiv-2312.02976, 2023.

[104] Y. Wu, W. Yan, T. Kurutach, L. Pinto, and P. Abbeel. Learning to manipulate deformable objects without demonstrations. arXiv preprint arXiv: Arxiv-1910.13439, 2019.

[105] H. Ha and S. Song. Flingbot: The unreasonable effectiveness of dynamic manipulation for cloth unfolding. arXiv preprint arXiv: Arxiv-2105.03655, 2021.

[106] D. Seita, Y. Wang, S. J. Shetty, E. Y. Li, Z. Erickson, and D. Held. Toolflownet: Robotic manipulation with tools via predicting tool flow from point clouds. arXiv preprint arXiv: Arxiv-2211.09006, 2022.

[107] X. Lin, Z. Huang, Y. Li, J. B. Tenenbaum, D. Held, and C. Gan. Diffskill: Skill abstraction from differentiable physics for deformable object manipulations with tools. arXiv preprint arXiv: Arxiv-2203.17275, 2022.

[108] Y. Ji, Z. Li, Y. Sun, X. B. Peng, S. Levine, G. Berseth, and K. Sreenath. Hierarchical reinforcement learning for precise soccer shooting skills using a quadrupedal robot. arXiv preprint arXiv: Arxiv-2208.01160, 2022.

[109] Y. J. Ma, W. Liang, G. Wang, D.-A. Huang, O. Bastani, D. Jayaraman, Y. Zhu, L. Fan, and A. Anandkumar. Eureka: Human-level reward design via coding large language models. arXiv preprint arXiv: Arxiv-2310.12931, 2023.

[110] A. Boeing and T. Bräunl. Leveraging multiple simulators for crossing the reality gap. In 2012 12th International Conference on Control Automation Robotics & Vision (ICARCV), pages 1113–1119, 2012. doi:10.1109/ICARCV.2012.6485313.

[111] J. Hwangbo, J. Lee, A. Dosovitskiy, D. Bellicoso, V. Tsounis, V. Koltun, and M. Hutter. Learning agile and dynamic motor skills for legged robots. Science Robotics, 4(26):eaau5872, 2019. doi:10.1126/scirobotics.aau5872. URL https://www.science.org/doi/abs/10.1126/scirobotics.aau5872.

[112] J. Luo, E. Solowjow, C. Wen, J. A. Ojea, A. M. Agogino, A. Tamar, and P. Abbeel. Reinforcement learning on variable impedance controller for high-precision robotic assembly. In International Conference on Robotics and Automation, ICRA 2019, Montreal, QC, Canada, May 20-24, 2019, pages 3080–3087. IEEE, 2019. doi:10.1109/ICRA.2019.8793506. URL https://doi.org/10.1109/ICRA.2019.8793506.

[113] P. C. Horak and J. C. Trinkle. On the similarities and differences among contact models in robot simulation. IEEE Robotics and Automation Letters, 4(2):493–499, 2019. doi:10.1109/LRA.2019.2891085.

[114] C. Finn, P. Abbeel, and S. Levine. Model-agnostic meta-learning for fast adaptation of deep networks. arXiv preprint arXiv: Arxiv-1703.03400, 2017.

[115] J. A. Fails and D. R. Olsen. Interactive machine learning. In Proceedings of the 8th International Conference on Intelligent User Interfaces, IUI ’03, page 39–45, New York, NY, USA, 2003. Association for Computing Machinery. ISBN 1581135866. doi:10.1145/604045.604056. URL https://doi.org/10.1145/604045.604056.

[116] S. Amershi, M. Cakmak, W. B. Knox, and T. Kulesza. Power to the people: The role of humans in interactive machine learning. AI Magazine, 35(4):105–120, Dec. 2014. doi:10.1609/aimag.v35i4.2513. URL https://ojs.aaai.org/aimagazine/index.php/aimagazine/article/view/2513.

[117] Y. Yue, J. Broder, R. Kleinberg, and T. Joachims. The k-armed dueling bandits problem. Journal of Computer and System Sciences, 78(5):1538–1556, 2012. ISSN 0022-0000. doi:10.1016/j.jcss.2011.12.028. URL https://www.sciencedirect.com/science/article/pii/S0022000012000281. JCSS Special Issue: Cloud Computing 2011.

[118] A. Jain, B. Wojcik, T. Joachims, and A. Saxena. Learning trajectory preferences for manipulators via iterative improvement. arXiv preprint arXiv: Arxiv-1306.6294, 2013.

[119] P. Christiano, J. Leike, T. B. Brown, M. Martic, S. Legg, and D. Amodei. Deep reinforcement learning from human preferences. arXiv preprint arXiv: Arxiv-1706.03741, 2017.

[120] E. Bıyık, D. P. Losey, M. Palan, N. C. Landolfi, G. Shevchuk, and D. Sadigh. Learning reward functions from diverse sources of human feedback: Optimally integrating demonstrations and preferences. arXiv preprint arXiv: Arxiv-2006.14091, 2020.

[121] K. Lee, L. Smith, and P. Abbeel. Pebble: Feedback-efficient interactive reinforcement learning via relabeling experience and unsupervised pre-training. arXiv preprint arXiv: Arxiv-2106.05091, 2021.

[122] X. Wang, K. Lee, K. Hakhamaneshi, P. Abbeel, and M. Laskin. Skill preferences: Learning to extract and execute robotic skills from human feedback. arXiv preprint arXiv: Arxiv-2108.05382, 2021.

[123] L. Ouyang, J. Wu, X. Jiang, D. Almeida, C. L. Wainwright, P. Mishkin, C. Zhang, S. Agarwal, K. Slama, A. Ray, J. Schulman, J. Hilton, F. Kelton, L. Miller, M. Simens, A. Askell, P. Welinder, P. Christiano, J. Leike, and R. Lowe. Training language models to follow instructions with human feedback. arXiv preprint arXiv: Arxiv-2203.02155, 2022.

[124] V. Myers, E. Bıyık, and D. Sadigh. Active reward learning from online preferences. arXiv preprint arXiv: Arxiv-2302.13507, 2023.

[125] R. Rafailov, A. Sharma, E. Mitchell, S. Ermon, C. D. Manning, and C. Finn. Direct preference optimization: Your language model is secretly a reward model. arXiv preprint arXiv: Arxiv-2305.18290, 2023.

[126] J. Hejna, R. Rafailov, H. Sikchi, C. Finn, S. Niekum, W. B. Knox, and D. Sadigh. Contrastive preference learning: Learning from human feedback without rl. arXiv preprint arXiv: Arxiv-2310.13639, 2023.

[127] W. B. Knox and P. Stone. Reinforcement learning from human reward: Discounting in episodic tasks. In 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, pages 878–885, 2012. doi:10.1109/ROMAN.2012.6343862.

[128] B. D. Argall, E. L. Sauser, and A. G. Billard. Tactile guidance for policy refinement and reuse. In 2010 IEEE 9th International Conference on Development and Learning, pages 7–12, 2010. doi:10.1109/DEVLRN.2010.5578872.

[129] T. Fitzgerald, E. Short, A. Goel, and A. Thomaz. Human-guided trajectory adaptation for tool transfer. In Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems, AAMAS ’19, page 1350–1358, Richland, SC, 2019. International Foundation for Autonomous Agents and Multiagent Systems. ISBN 9781450363099.

[130] A. V. Bajcsy, D. P. Losey, M. K. O’Malley, and A. D. Dragan. Learning robot objectives from physical human interaction. In Conference on Robot Learning, 2017. URL https://api.semanticscholar.org/CorpusID:28406224.

[131] A. Najar, O. Sigaud, and M. Chetouani. Interactively shaping robot behaviour with unlabeled human instructions. arXiv preprint arXiv: Arxiv-1902.01670, 2019.

[132] N. Wilde, E. Bıyık, D. Sadigh, and S. L. Smith. Learning reward functions from scale feedback. arXiv preprint arXiv: Arxiv-2110.00284, 2021.

[133] J. Zhang and K. Cho. Query-efficient imitation learning for end-to-end autonomous driving. arXiv preprint arXiv: Arxiv-1605.06450, 2016.

[134] W. Saunders, G. Sastry, A. Stuhlmueller, and O. Evans. Trial without error: Towards safe reinforcement learning via human intervention. arXiv preprint arXiv: Arxiv-1707.05173, 2017.

[135] Z. Wang, X. Xiao, B. Liu, G. Warnell, and P. Stone. Appli: Adaptive planner parameter learning from interventions. arXiv preprint arXiv: Arxiv-2011.00400, 2020.

[136] C. Celemin and J. R. del Solar. An interactive framework for learning continuous actions policies based on corrective feedback. Journal of Intelligent & Robotic Systems, 95:77–97, 2018. doi:10.1007/s10846-018-0839-z. URL http://link.springer.com/article/10.1007/s10846-018-0839-z/fulltext.html.

[137] Z. Peng, W. Mo, C. Duan, Q. Li, and B. Zhou. Learning from active human involvement through proxy value propagation. In Thirty-seventh Conference on Neural Information Processing Systems, 2023. URL https://openreview.net/forum?id=q8SukwaEBy.

[138] C. Celemin, R. Pérez-Dattari, E. Chisari, G. Franzese, L. de Souza Rosa, R. Prakash, Z. Ajanović, M. Ferraz, A. Valada, and J. Kober. Interactive imitation learning in robotics: A survey. arXiv preprint arXiv: Arxiv-2211.00600, 2022.

[139] R. Hoque, A. Mandlekar, C. R. Garrett, K. Goldberg, and D. Fox. Interventional data generation for robust and data-efficient robot imitation learning. In First Workshop on Out-of-Distribution Generalization in Robotics at CoRL 2023, 2023. URL https://openreview.net/forum?id=ckFRoOaA3n.

[140] J. Crandall and M. Goodrich. Characterizing efficiency of human robot interaction: a case study of shared-control teleoperation. In IEEE/RSJ International Conference on Intelligent Robots and Systems, volume 2, pages 1290–1295 vol.2, 2002. doi:10.1109/IRDS.2002.1043932.

[141] A. D. Dragan and S. S. Srinivasa. A policy-blending formalism for shared control. The International Journal of Robotics Research, 32(7):790–805, 2013. doi:10.1177/0278364913490324. URL https://doi.org/10.1177/0278364913490324.

[142] D. Gopinath, S. Jain, and B. D. Argall. Human-in-the-loop optimization of shared autonomy in assistive robotics. IEEE Robotics and Automation Letters, 2(1):247–254, 2017. doi:10.1109/LRA.2016.2593928.

[143] A. D. Dragan and S. S. Srinivasa. Formalizing assistive teleoperation, volume 376. MIT Press, July, 2012.

[144] H. J. Jeon, D. P. Losey, and D. Sadigh. Shared autonomy with learned latent actions. arXiv preprint arXiv: Arxiv-2005.03210, 2020.

[145] Y. Cui, S. Karamcheti, R. Palleti, N. Shivakumar, P. Liang, and D. Sadigh. “no, to the right” – online language corrections for robotic manipulation via shared autonomy. arXiv preprint arXiv: Arxiv-2301.02555, 2023.

[146] P. Wu, Y. Shentu, Z. Yi, X. Lin, and P. Abbeel. Gello: A general, low-cost, and intuitive teleoperation framework for robot manipulators. arXiv preprint arXiv: Arxiv-2309.13037, 2023.

[147] C. Wang, H. Shi, W. Wang, R. Zhang, L. Fei-Fei, and C. K. Liu. Dexcap: Scalable and portable mocap data collection system for dexterous manipulation. arXiv preprint arXiv: Arxiv-2403.07788, 2024.

[148] Z. Fu, T. Z. Zhao, and C. Finn. Mobile aloha: Learning bimanual mobile manipulation with low-cost whole-body teleoperation. arXiv preprint arXiv: Arxiv-2401.02117, 2024.

[149] S. Dass, W. Ai, Y. Jiang, S. Singh, J. Hu, R. Zhang, P. Stone, B. Abbatematteo, and R. Martín-Martín. Telemoma: A modular and versatile teleoperation system for mobile manipulation. arXiv preprint arXiv: Arxiv-2403.07869, 2024.

[150] T. He, Z. Luo, W. Xiao, C. Zhang, K. Kitani, C. Liu, and G. Shi. Learning human-to-humanoid real-time whole-body teleoperation. arXiv preprint arXiv: Arxiv-2403.04436, 2024.

[151] H. Liu, S. Dass, R. Martín-Martín, and Y. Zhu. Model-based runtime monitoring with interactive imitation learning. arXiv preprint arXiv: Arxiv-2310.17552, 2023.

[152] Z. Liu, A. Bahety, and S. Song. Reflect: Summarizing robot experiences for failure explanation and correction. arXiv preprint arXiv: Arxiv-2306.15724, 2023.

[153] L. Ankile, A. Simeonov, I. Shenfeld, and P. Agrawal. Juicer: Data-efficient imitation learning for robotic assembly. arXiv preprint arXiv: Arxiv-2404.03729, 2024.

[154] M. N. Mistry and L. Righetti. Operational space control of constrained and underactuated systems. In Robotics: Science and Systems, 2011. URL https://api.semanticscholar.org/CorpusID:10392712.

[155] L. Fan, G. Wang, Y. Jiang, A. Mandlekar, Y. Yang, H. Zhu, A. Tang, D.-A. Huang, Y. Zhu, and A. Anandkumar. Minedojo: Building open-ended embodied agents with internet-scale knowledge. arXiv preprint arXiv: Arxiv-2206.08853, 2022.

[156] D.-A. Clevert, T. Unterthiner, and S. Hochreiter. Fast and accurate deep network learning by exponential linear units (elus). arXiv preprint arXiv: Arxiv-1511.07289, 2015.

[157] D. Makoviichuk and V. Makoviychuk. rl-games: A high-performance framework for reinforcement learning. https://github.com/Denys88/rl_games, May 2021.

[158] J. Schulman, P. Moritz, S. Levine, M. Jordan, and P. Abbeel. High-dimensional continuous control using generalized advantage estimation. arXiv preprint arXiv: Arxiv-1506.02438, 2015.

[159] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv: Arxiv-1412.6980, 2014.

Authors:

(1) Yunfan Jiang, Department of Computer Science;

(2) Chen Wang, Department of Computer Science;

(3) Ruohan Zhang, Department of Computer Science and Institute for Human-Centered AI (HAI);

(4) Jiajun Wu, Department of Computer Science and Institute for Human-Centered AI (HAI);

(5) Li Fei-Fei, Department of Computer Science and Institute for Human-Centered AI (HAI).

This paper is